My Hunt To Make Use of AI

How I Spent a Ton of Time Trying to Save Time


My intro to generative AI came while I was working on my animated YouTube series, “Dan Vs. Himself.”

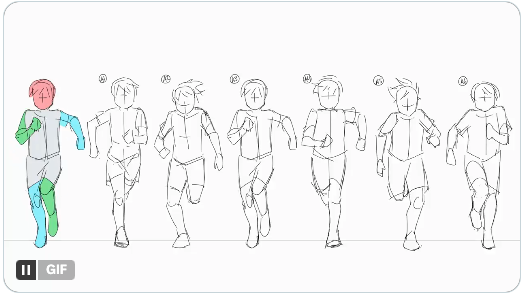

Instead of drawing frame-by-frame animation like Disney or other people who have money and/or time, I use Adobe Character Animator, which rigs characters like puppets and makes it much easier to have them talk, walk, etc.

However, as simple as my animations are, they can still be very time-consuming to make, so I hoped some of the newer AI apps out there could help me create my next animation and make my process more efficient.

WRITING WITH AI

CHATGPT AS A WRITER

I started by asking ChatGPT to write a one-page sketch from scratch and gave Chat a logline for what I had in mind. If you’re curious about the details, here is the transcript.

I had low expectations, so I was impressed that Chat not only knew how to format a script but could also generate one that mostly made sense and even added ideas I hadn’t thought of (Disclaimer: I’d spent ten minutes, tops, thinking of ideas for the script before resorting to Chat).

I continued to give Chat directions from there (e.g., make this part funnier, end it with the characters looking unhappy, etc.). Each iteration felt better, but I still didn’t feel I had enough for a sketch I liked, so I tried a different approach.

CHATGPT AS A PUNCH-UP WRITER

Next, I plugged in an already drafted script (the script for the meditation guru short featured above) and asked Chat to make it “funnier.” Here’s the transcript of how it turned out.

I went back and forth, questioning which lines and directions were funnier, mine or Chat’s. The process felt similar to when I share my script for feedback with humans and get all sorts of suggestions. The advice can be simultaneously eye-opening, exhilarating, and paralyzing.

I eventually edited two versions and recorded them both. Maybe because of my lack of acting chops, or because the lines didn’t feel authentic, I wasn’t able to pull off most of Chat’s suggestions and decided to mostly stick with my original script.

After creating a draft of the animation, however, I asked a friend for punch-up ideas on the video and he suggested some very similar lines to the ones Chat had originally suggested.

Edit: Over a year later, ChatGPT has improved in many ways, and I’ve also learned about other programs, like Google Gemini and Claude, that can write scripts too. Claude, it seems, might be able to do it more confidentially.

IMAGE GENERATION

MIDJOURNEY

I first tested Midjourney to see if it could create some background images for my animations.

Midjourney had me sign into Discord, where I saw hundreds (thousands? millions?) of other users generating tons of unbelievable paintings, cartoons, photos, etc.

All they had to do was type “/imagine prompt:” followed by some keywords, and images like the one below would appear within seconds. For instance, the prompt for the image below was something like “reimagine Cersei Lannister in Russia.”

For the Meditation Guru video backgrounds, I plugged in:

“/imagine prompt: large empty beach, Rick and Morty cartoon style”

and got the following images:

From there, I clicked an “up-res” button below the grid to raise the resolution of the lower third picture, then right-clicked it to download it. I didn’t play around much with adjusting the prompts for these backgrounds.

Also, I’ve since found other programs to help up-res the images and sometimes footage, including:

You can also use Photoshop’s “Preserve Details 2.0” upscale option to up-res images to some extent.

I was curious how easy it was to generate art in my style. To help create one particular visual joke, I uploaded my Dan character to Discord and asked Midjourney to have the character hold a skull and look at it like Hamlet (in the position of the photo to the right).

Below are some of the results:

ADOBE FIREFLY

Recently, I also tried Adobe Firefly to see if I could use it to take my simple, scaled-down drawings and render them in my fuller style.

Adobe Firefly lets you upload an image to set up the composition, and you can follow that guide as much or as little as you want depending on the strength you choose. I placed my scaled-down image here.

It also lets you upload an image to determine the style, so I uploaded my colored-in, slightly more detailed “Dan” character there.

You can also choose from Firefly’s own gallery of composition and style examples.

Below are some of the results I got while playing with the settings and text prompts…

For an upcoming series, I was commissioned to depict a construction accident that the main subject was involved in during 1972.

To help illustrate this event, I combined my skills in drawing, editing, and animation with generative AI to develop the video.

To the left is the final video.

AI GENERATIVE VIDEO

EDITING WITH AI

For editing podcasts, I found FireCut, which works as an extension/plugin for Adobe Premiere (used to be for more) and lets you:

- Remove all the long silences between words

- Remove filler words (e.g., ums, ahs, etc.)

- Add dynamic animated captions

- Incorporate b-roll

- Auto switch the camera for multi-cam sequences so that the person who is speaking is almost always on camera (except for a few cut-away shots)

- Choose highlights for good social cuts

- And more…

- All within Premiere

CapCut offers some helpful tools, even in the free version.

The downside for me was that I had to move the footage out of Premiere and into CapCut, but if you don’t have other editing software, this option might work great for you. CapCut can also remove silences and filler words and add captions, and you can even type in a script that will generate a fully edited video of stock images.

Runway also offers a bunch of cool features (below), including the ability to generate video and a new feature called Act-One, which can turn a video of you talking into anything from a talking 3D-animated character to a talking knight in shining armor with a castle in the background.

AI AUDIO TOOLS

ADOBE PODCAST

Removes noise and improves audio, even for recordings made from a distance on a smartphone or computer.

ELEVEN LABS

One of many deepfake audio programs. Use it to create:

- Sample voice-over narration

- Animated character voices

- Sample narration for a pitch or comedy, by uploading a 30-second sample of a real person’s voice (e.g., Morgan Freeman)

PREMIERE AUDIO ENHANCE

Similar to Adobe Podcast, but with more customization, and you can stay within Adobe Premiere.

However, it has caused my computer to shut down in the past when I tried to batch-edit too many clips at once.

LALAL.AI

Lets you separate voices from music in videos and tracks where the two are baked together.

I haven’t gotten to check this one out, but I’ve heard it’s good.

AI MUSIC TOOLS

SOME THOUGHTS

All of these apps became collaborators in a way. I wouldn’t hire Chat to write my script, but I might use it to brainstorm different directions to go in or ways to punch up specific lines of dialogue. With both approaches, the more specific my prompts were, the better the results.

To some degree, Chat and the other apps provide insight into how to be a better director. I have to know what I want the story to be about, what I want to improve within the script, some idea of how to improve it, and how I want to show it. I need the vocabulary to articulate that as best I can, and the patience to sit with it until it becomes what I’m looking for. It’s not a one-and-done thing. I have to go through drafts with it the same way I would with human collaborators.

I also have to be able to distinguish between the feedback and creations from Chat that I like and what I want to cut. I don’t want to just use the uncut scripts Chat delivers. For instance, when I plugged in the meditation guru script, Chat caught onto the comedy game I was playing and heightened it, but also played beats too many times and for too long for my taste.

AI AND SHAME

Some people feel like using AI is cheating, but I’ve been “cheating” for a long time. I grew up reading every CliffsNotes I could from ninth grade through college, and today I still find myself looking up summaries of books on Wikipedia, podcasts, and YouTube. I’ve also started using AI to summarize YouTube videos that were themselves summarizing various books on efficiency and productivity.

The efficiency/cheating question has shown up with my animations as well. As I mentioned above, I’ve been using Adobe Character Animator, which rigs my animated characters like puppets and makes it much easier to have them talk, walk, etc.

Now that I see Runway’s Act-One possibly taking it a step further, I’m interested in seeing what I can do with that.

A lot of this is helpful in making the animation process more efficient. So why does it feel wrong?

I can see how, by focusing on the destination, I’m denying myself in other ways. If I rely too heavily on ChatGPT and Midjourney, I may be less likely to develop new writing/drawing skills or my own unique style (if it’s possible to develop a truly unique style).

But is that true? Isn’t AI just another tool that could help me develop my own style?

And are ChatGPT and Midjourney even that different from things I’ve already been using?

Gmail already guesses what I want to write in half my emails.

Google can give me answers to anything, including how to handle my anxiety about AI.

YouTube offers tutorials on everything from how to use ChatGPT to how to build my own version.

And filters in everything from Photoshop to Snapchat help me with everything from looking like an avatar to making my face as smooth as a baby’s bottom.

Not to mention, I’m not the first person to screw with the animation process (or the filmmaking or music-making process, for that matter). Long before me, people were innovating new ways to create animation, and art in general.

For instance, in this article, Ben Ross explains how William Hanna and Joseph Barbera pushed the idea of “limited animation” to save time and money. They reused backgrounds and character movements rather than re-drawing them and would just move certain body parts, like arms or legs, and leave the rest of the shot static. As a result, they only needed a few thousand drawings per short, rather than the typical 20,000+ drawings.

If Hanna-Barbera cut corners and created a lot of successful shows, I’m not an awful person, right?

As some friends pointed out to me recently, it depends on my purpose for these animated shorts. Am I just trying to get more views and subscribers? Am I trying to create hyper-realistic artwork and super smooth animation, or am I trying to develop my own style?

Am I trying to write award-winning scripts or just make people laugh? Or am I trying to make silly sketches about my relationship with anxiety and the people in my life so others who might be having similar experiences don’t feel alone? Or am I just trying to have fun?

Some of these definitely ring true, but I’m still figuring it out. I’m still playing around with AI to see how I can use it better (and not spend so much money in the meantime). I’ll share more when I do.